On November 18, 2025, a major disruption rippled across the internet when Cloudflare—one of the world’s most widely used internet infrastructure providers—experienced a significant service outage. Because Cloudflare supports millions of websites with DNS, CDN caching, traffic filtering, API routing, and security layers, even a single malfunction can create global-level instability. And that’s exactly what millions of users witnessed that day: slow loading pages, apps refusing to open, authentication failures, and entire platforms temporarily unreachable.

While outages are not new in the digital world, this one stood out because of how deeply Cloudflare sits in the modern connectivity pipeline. In many ways, the incident offered a real-world reminder of how much of the internet relies on a few key infrastructure players.

What Triggered the Outage?

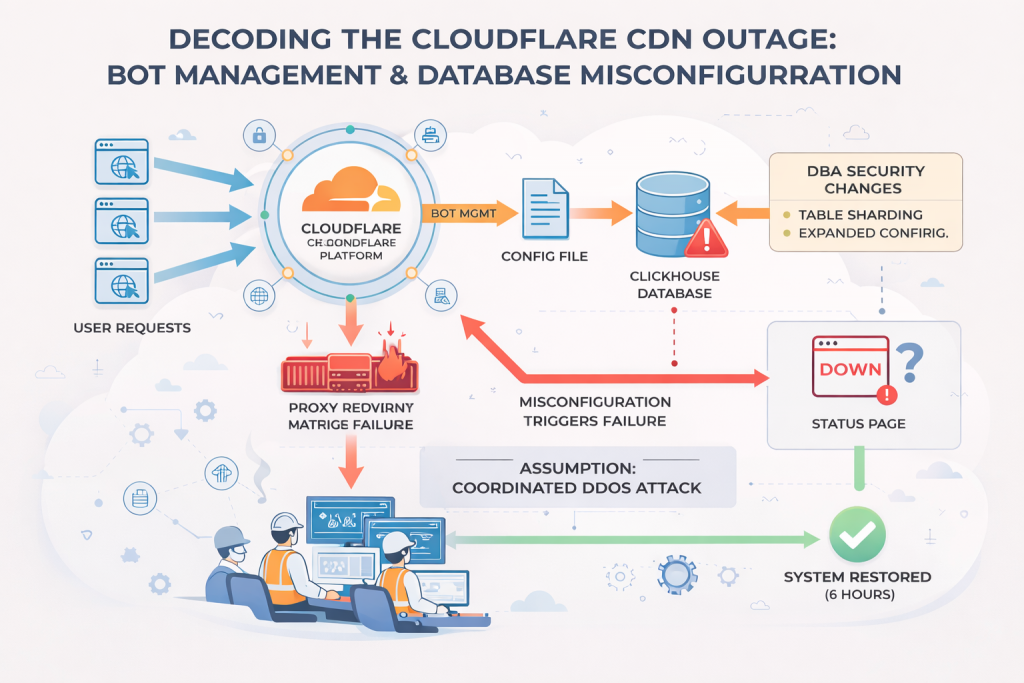

According to Cloudflare’s engineering investigation, the root cause was a faulty internal configuration deployed to part of their network. This configuration affected a critical service responsible for evaluating incoming requests and filtering harmful traffic. When the service encountered the corrupted configuration file, it failed in a “closed” state—meaning instead of allowing traffic through when uncertain, it blocked it.

This resulted in a surge of HTTP 5XX errors, especially 500 and 503 responses, which are typical indicators of server-side failures. Users around the world soon realized that websites using Cloudflare were timing out, stalling, or redirecting to error pages.

Unlike outages caused by connectivity failures or cyberattacks, this event was self-inflicted by a software deployment that didn’t behave as expected. Small configuration issues can have an outsized impact in distributed systems, and this incident highlighted how even a single misconfiguration in a shared global network can cascade quickly.

How the Outage Spread

When Cloudflare pushed the problematic update, only part of the network received it at first. This meant some nodes were functioning normally while others were rejecting legitimate requests. As traffic routing systems attempted to balance loads across these nodes, users received unpredictable experiences—one moment a service worked, the next it failed.

This “flapping” behavior created instability until Cloudflare engineers isolated the issue, rolled back the configuration, and restored normal operations. Because Cloudflare’s infrastructure is interconnected, the rollback had to be done carefully to avoid further disruptions.

The outage lasted several hours for some regions, though others recovered sooner depending on local routing and DNS propagation.

Real-World Impact on Digital Services

The disruption was widely felt because Cloudflare isn’t simply a website host—it acts as a performance booster, security layer, and traffic gateway for businesses large and small. Popular platforms relying on Cloudflare for DNS or CDN saw sudden performance drops. E-commerce portals, SaaS dashboards, streaming services, and even mobile apps that depend on API calls struggled to function.

For many businesses, this meant:

- customer logins failing

- checkout pages timing out

- dashboards unable to load

- latency spikes or complete service outages

Even organizations that had redundant hosting infrastructure were affected if they used Cloudflare for routing or DNS resolution.

What This Outage Reveals About Internet Infrastructure

1. Centralized infrastructure creates shared risk

Although Cloudflare increases performance and security for millions of websites, the outage showed how a single point of failure can disrupt a huge portion of online activity. Centralization offers efficiency but also creates vulnerability.

2. Redundancy must extend beyond cloud hosting

Many companies invest in multi-cloud or backup environments, but rely on a single provider for DNS or CDN. The outage highlighted why redundancy must cover every layer of the stack, including traffic routing and security services—not just compute resources.

3. Configuration management remains one of the hardest challenges

Modern cloud platforms are highly automated, but that also means configuration errors can spread extremely fast. Even with safety checks, edge cases slip through. The best protection is layered testing, staged rollouts, and real-time anomaly detection.

How Cloudflare Responded

Cloudflare acted quickly, pausing the rollout and identifying the failing component. Engineers issued a rollback, restarted affected services, and published a transparent incident update. Their communication emphasized that the issue was not caused by an attack, but by an internal logic failure during deployment.

The company also announced improvements to configuration validation, emphasizing stronger safeguards to prevent similar issues in the future.

Moving Forward: What Organizations Can Learn

The November 2025 outage is a valuable case study for businesses that rely heavily on cloud-based traffic tools:

- Implement multi-CDN strategies where practical

- Use secondary DNS services to maintain accessibility

- Design fail-open mechanisms for internal services that filter or inspect traffic

- Monitor end-to-end user experience, not just internal server metrics

Most importantly, organizations must acknowledge that outages are inevitable. The real question is how resilient the architecture remains when a critical provider becomes unavailable.

The Cloudflare outage of November 18, 2025 was a reminder of the complexity and fragility of the internet’s backbone. While Cloudflare resolved the issue efficiently and transparently, the event highlighted how interconnected digital services have become.

While ISPs cannot protect themselves from technical issues with the Internet, they can definitely adopt tools that strengthen operational visibility and fault management. Jaze ISP Manager provides powerful helpdesk and task management features to help teams detect and respond to internal outages faster.